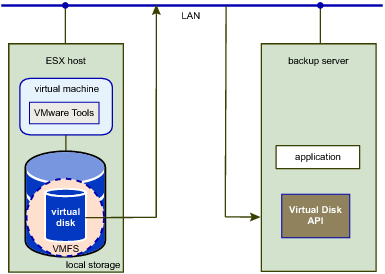

The virtual disk library reads virtual disk data from /vmfs/volumes on ESX/ESXi hosts, or from the local file system on hosted products. This file access method is built into VixDiskLib, so it is always available on local storage. However, it is not a network transport method, and is seldom used for vSphere backup.

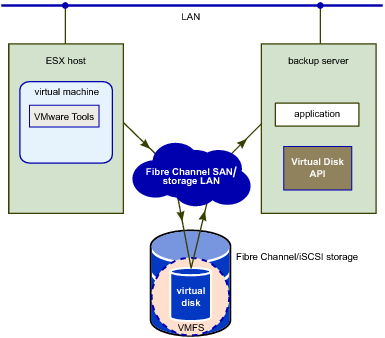

SAN mode requires applications to run on a backup server with access to the SAN storage (Fibre Channel, iSCSI, or SAS-connected) that contains the virtual disks to be accessed. As shown in SAN Transport Mode for Virtual Disk, this method is efficient because no data needs to be transferred through the production ESX/ESXi host. A SAN backup proxy must be a physical machine. If an optical media or tape drive is connected to it, backups can be made entirely LAN-free.

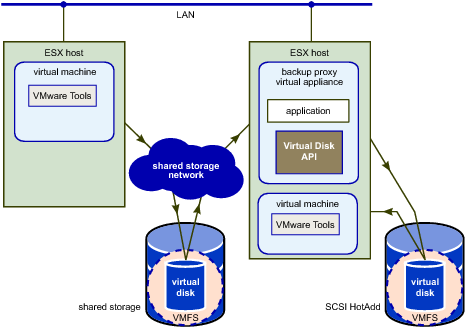

HotAdd is a good way to get virtual disk data from a virtual machine to a backup appliance (or backup proxy) for sending to the media server. The attached HotAdd disk is shown in HotAdd Transport Mode for Virtual Disk.

If the HotAdd proxy is a virtual machine that resides on a VMFS-3 volume, choose a volume with a block size appropriate for the maximum virtual disk size of the virtual machines that customers want to back up, as shown in VMFS-3 Block Size for HotAdd Backup Proxy. This caveat does not apply to VMFS-5 volumes, which always have a 1 MB file block size.
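The block-size choice above can be sketched as a small lookup. This is a minimal illustration, not part of VDDK: `vmfs3_min_block_size_mb` is a hypothetical helper, and the limits in the table are the commonly documented VMFS-3 figures (1 MB, 2 MB, 4 MB, and 8 MB blocks for roughly 256 GB, 512 GB, 1024 GB, and 2048 GB files). Treat them as assumptions and confirm them against VMFS-3 Block Size for HotAdd Backup Proxy for your release.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Assumed VMFS-3 limits: block size (MB) -> approximate maximum
 * file size (GB). Verify against the documentation for your release. */
typedef struct {
    unsigned block_size_mb;
    uint64_t max_file_gb;
} Vmfs3Limit;

static const Vmfs3Limit kVmfs3Limits[] = {
    {1, 256}, {2, 512}, {4, 1024}, {8, 2048},
};

/* Hypothetical helper: returns the smallest VMFS-3 block size (in MB)
 * whose file-size limit exceeds largest_disk_gb, or 0 if none does.
 * The comparison is strict because the real limit is usually reported
 * as slightly below the round figure. */
unsigned vmfs3_min_block_size_mb(uint64_t largest_disk_gb)
{
    size_t n = sizeof kVmfs3Limits / sizeof kVmfs3Limits[0];

    assert(largest_disk_gb > 0);
    for (size_t i = 0; i < n; i++) {
        if (largest_disk_gb < kVmfs3Limits[i].max_file_gb)
            return kVmfs3Limits[i].block_size_mb;
    }
    return 0;
}
```

With these assumed limits, a proxy that must back up a 300 GB virtual disk would need a volume formatted with at least a 2 MB block size.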

On Windows, the keys shown in Windows Registry Keys for VDDK are required at the following Windows registry path:

To support registry redirection, registry entries needed by VDDK on 64-bit Windows must be placed under registry path Wow6432Node. This is the correct location for both 32-bit and 64-bit binaries on 64-bit Windows.

On Linux, SSL certificate verification requires the use of thumbprints; there is no mechanism to validate an SSL certificate without a thumbprint. On vSphere, the thumbprint is a hash obtained from a trusted source such as vCenter Server, and passed in the SSLVerifyParam structure from the NFC ticket. If you add the following line to the VixDiskLib_InitEx configuration file, Linux virtual machines will check the SSL thumbprint:

The following library functions enforce SSL thumbprint verification on Linux: InitEx, PrepareForAccess, EndAccess, GetNfcTicket, and the GetRpcConnection interface that is used by the advanced transports.
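For illustration, thumbprints in vSphere are typically written as colon-separated hexadecimal byte pairs of a certificate hash. The sketch below formats a raw digest in that style. `format_thumbprint` is a hypothetical helper, not a VDDK function, and it assumes the digest bytes were computed elsewhere from a certificate obtained through a trusted source.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>

/* Hypothetical helper, not part of VDDK: writes digest bytes as
 * colon-separated uppercase hex ("AB:CD:...") into out.
 * Returns 0 on success, -1 on invalid arguments or a too-small buffer. */
int format_thumbprint(const unsigned char *digest, size_t digest_len,
                      char *out, size_t out_size)
{
    if (digest == NULL || out == NULL || digest_len == 0)
        return -1;
    /* Each byte needs two hex characters plus a separator or final NUL. */
    if (out_size < digest_len * 3)
        return -1;

    for (size_t i = 0; i < digest_len; i++) {
        int n = snprintf(out + i * 3, 3, "%02X", (unsigned)digest[i]);
        assert(n == 2);
        (void)n;
        /* Replace the NUL written by snprintf with ':' between bytes. */
        out[i * 3 + 2] = (i + 1 < digest_len) ? ':' : '\0';
    }
    return 0;
}
```

A 20-byte digest needs a buffer of at least 60 bytes; the function rejects anything smaller rather than truncating the thumbprint.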

NBD employs the VMware network file copy (NFC) protocol. NFC Session Connection Limits shows limits on the number of network connections for various host types. VixDiskLib_Open() uses one connection for every virtual disk that it accesses on an ESX/ESXi host. VixDiskLib_Clone() also requires a connection. It is not possible to share a connection across disks. These are host limits, not per process limits, and do not apply to SAN or HotAdd.
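Because each open disk consumes one connection on the host, a backup application may want to track how many NBD connections it holds before opening more disks. The following is a minimal sketch under stated assumptions: `NfcBudget` and its functions are hypothetical, not VDDK APIs, and the limit is an input that should come from NFC Session Connection Limits for the host type. Since the limits are per host rather than per process, a tracker like this only accounts for the connections of the process that uses it.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical tracker for NFC connections held against one host. */
typedef struct {
    size_t host_limit; /* taken from the documented limit for the host type */
    size_t in_use;     /* connections currently held by this process */
} NfcBudget;

void nfc_budget_init(NfcBudget *b, size_t host_limit)
{
    assert(b != NULL);
    b->host_limit = host_limit;
    b->in_use = 0;
}

/* Reserves one connection per disk. Returns 1 if all of them fit,
 * or 0 (reserving nothing) if the request would exceed the limit. */
int nfc_budget_reserve(NfcBudget *b, size_t disk_count)
{
    assert(b != NULL);
    if (disk_count > b->host_limit - b->in_use)
        return 0;
    b->in_use += disk_count;
    return 1;
}

/* Returns connections to the budget after the disks are closed. */
void nfc_budget_release(NfcBudget *b, size_t disk_count)
{
    assert(b != NULL && disk_count <= b->in_use);
    b->in_use -= disk_count;
}
```

An application would call `nfc_budget_reserve` before opening a virtual machine's disks over NBD and `nfc_budget_release` after closing them, deferring the backup when the reservation fails.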